How I Learned to Stop Worrying and Love Tableau on Big Data (Part 1)

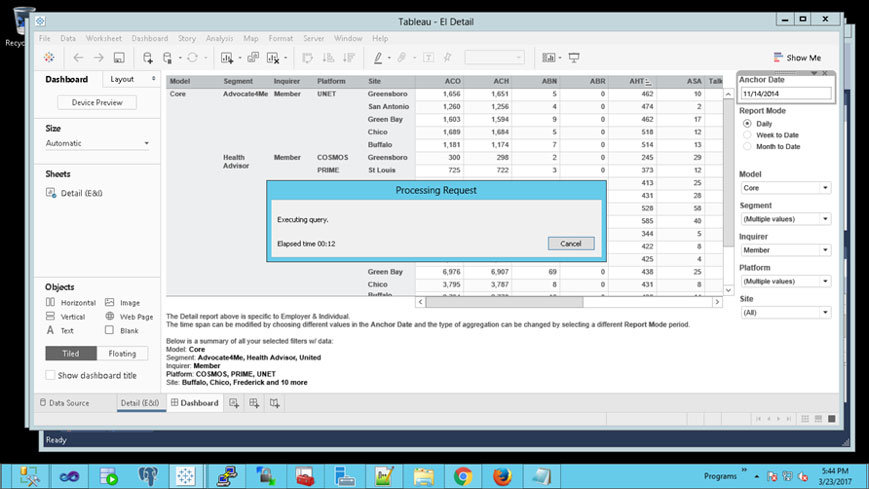

Tableau works well when you have fast data. The analysis just flows. Drop a dimension or a measure on a shelf and you get instant feedback with a visual representation of your data. It keeps you in the flow of your analysis and gives you a feel for your data. That is important because you get a better sense of the questions that you are asking and the validity of your answers.

There is one situation where this flow can break down: slow queries. Tableau’s answer is an easy and robust one. Extract your data via the Tableau Data Engine or the newly released Hyper. That usually does the trick by taking the data out of the original data store, whether it is a database or a huge text file. But any in-memory solution has limitations. When you get to very big data sets, hundreds of millions or billions of records, this too can break down. It is also challenging when the data must be very fresh, for example refreshed every 10 minutes. Every in-memory or extract solution for analytics has a limit beyond which the refresh speed or query speed is too slow for users to stay in the flow of their analysis. That adds frustration to what should be a frictionless experience.

Here lies the quiet challenge between Tableau and big data. Tableau makes it easy to analyze big data (and send queries), and a big data platform like Hadoop struggles to keep up. This struggle has been going on for so long it is as if no one has the time, training or inclination for strategic thought. Analytics is too important to be left to the DBAs. Likewise, performance engineering is too important to be left to the analysts.

There is a solution. One that does not require a big and expensive analytical database platform. One that does not require performance engineering to be done ahead of time to make the queries run quickly. And one that does not require an intermediate semantic layer that takes time and expertise to create. Jethro can provide fast query performance on your big data without the expense of a traditional analytical database or the effort of performance engineering or OLAP design.

We accelerate queries using three methods: full indexing, auto-generated cubes, and caching. The first two are the most important, and the following best practices are designed to make the most of our indexes and cubes.

When is it Big Data for Tableau anyway?

If you look at the collective wisdom of the Tableau community, you will find that the limit on the number of records the Tableau Data Engine can handle is given as “millions to billions”. That is a wide swath of possibility, spanning several orders of magnitude! There is a reason the recommendation is so imprecise: it is difficult to predict the query latency or, even more important, the extract refresh time. From what I have seen, these are the two limiting factors in the utility of the Data Engine.

The first factor that causes issues is the time to refresh an extract. The benchmark expectation for refresh time is about 1 million records per minute. Unfortunately, I usually do not see that speed, due to the source of the data or environmental limitations. So even at the benchmark rate, a one-billion-record extract would take over 16 hours to create. Once created, the extract may perform well enough, but the creation time makes the extract untenable. The extract creation time is further exacerbated by the number of columns. When the data gets large enough (hundreds of millions of records), data stewards will be required to drop some useful dimensions and measures from a data source. This in turn limits the usefulness for ad hoc analysis, or requires you to have multiple data extracts of closely related subjects (trust me, it can get confusing).
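The refresh-time arithmetic above can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate: it assumes a constant throughput, takes the 1 million records per minute benchmark as the default, and ignores column count, which the text notes makes things worse. The function name `extract_refresh_hours` is made up for this illustration.

```python
def extract_refresh_hours(records: int, records_per_minute: float = 1_000_000) -> float:
    """Rough extract refresh time in hours, assuming constant throughput.

    The default rate is the benchmark expectation quoted in the text;
    real environments are often slower.
    """
    if records_per_minute <= 0:
        raise ValueError("records_per_minute must be positive")
    minutes = records / records_per_minute
    return minutes / 60


if __name__ == "__main__":
    # Compare a few extract sizes at the benchmark rate.
    for rows in (10_000_000, 100_000_000, 1_000_000_000):
        hours = extract_refresh_hours(rows)
        print(f"{rows:>13,} records: about {hours:.1f} hours")
```

At the benchmark rate, one billion records works out to roughly 16.7 hours. Passing a lower `records_per_minute` shows how quickly the estimate grows when the source system cannot sustain that speed.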

Hyper was recently released in Tableau 10.5 and is a step in a better direction for an extract-based solution for bigger data. I hate to judge before all the facts are in, but it will not address all big data needs, especially when the data volumes are greater than a few billion records. As with any extract-based solution, there will always be data that needs to be queried live because it is too big to extract.

Does the analysis change when using Tableau on big data?

One thing to consider is that the analysis potential is greatly increased with big data. There are valuable insights to be found by aggregating to look at a high level, but also by drilling deep into the details to find the few thousand records out of a few billion that interest you. With the limitations on data size removed, you can analyze a longer period without sacrificing the analytical richness of the granular data. Modern BI platforms like Tableau enable users not only to do that but also to quickly change the questions that they are asking of the data. Now all that is needed is a data platform like Jethro that adjusts automatically to the changing queries and tunes performance to match.

How Jethro neatly addresses these kinds of analysis

Jethro has been designed from the ground up to address the challenges faced by Tableau users who need to analyze big data. Tableau is a great application for analyzing your data, but the experience wanes when query times are slow. What we have found is that queries from Tableau are easy to profile at a high level, and we realized that you cannot properly improve performance without multiple acceleration strategies. Jethro uses both indexes and cubes as the backbone, with a sophisticated optimizer on top. Indexes and cubes are complementary in nature, especially when you consider that BI queries range from the highly aggregated to the highly granular. We also leverage Tableau’s data connections as our semantic layer. They contain all that Jethro needs to build the aggregations in our cubing technology. For more info on this, see our whitepaper (http://info.jethro.io/achieving-interactive-business-intelligence-on-big-data). This is important to be aware of because you can leverage our cubes and indexes to get the best experience possible with the Tableau/Jethro stack.
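To make the index-versus-cube distinction concrete, here is a toy Python sketch of the general idea. It is not Jethro's implementation: the data, the column names, and the helpers `build_index` and `build_cube` are all invented for illustration. A pre-aggregated cube answers a high-level question without touching the rows, while an index narrows a granular question to the few rows that match.

```python
from collections import defaultdict

# Hypothetical fact rows, standing in for a very large table.
rows = [
    {"region": "East", "product": "A", "sales": 10},
    {"region": "East", "product": "B", "sales": 5},
    {"region": "West", "product": "A", "sales": 7},
    {"region": "West", "product": "A", "sales": 3},
]


def build_index(rows, column):
    """Map each distinct value of `column` to the positions of matching rows."""
    index = defaultdict(list)
    for pos, row in enumerate(rows):
        index[row[column]].append(pos)
    return dict(index)


def build_cube(rows, dimension, measure):
    """Pre-aggregate SUM(measure) grouped by `dimension`."""
    cube = defaultdict(int)
    for row in rows:
        cube[row[dimension]] += row[measure]
    return dict(cube)


product_index = build_index(rows, "product")
region_cube = build_cube(rows, "region", "sales")

# Highly aggregated query (total sales by region): read straight from the cube.
print(region_cube)

# Highly granular query (rows for product B): the index points at the matches.
print([rows[pos] for pos in product_index["B"]])
```

The two structures cover opposite ends of the query range, which is why a platform serving ad hoc BI queries would plausibly want both plus something that picks between them per query.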

Check back next week for part two of this post, Top 10 Jedi Tips for using Tableau on Jethro.