Don’t forget that fast queries can hide stale data: demystifying “Real time” and “Interactive” for big data


Discussing the state of SQL on Hadoop, Merv Adrian rightly points out that “interactive queries” doesn't necessarily mean real-time or near-real-time data.

After looking at the SQL over Hadoop offerings from big data vendors, he writes:

“None of this is real-time, no matter what product branding is applied. On the continuum between batch and true real-time, these offerings fall in between – they are interactive.”

“Real-time” is a term that technology vendors use inconsistently, and often abuse, along with “interactive”, “low-latency” and others. So what does it actually mean in the context of big data?

“Real-time” is often used to describe two different ideas:

- Data “freshness”: how much time passes between the moment data is written into the database and the moment we can include it in analytics results? Terms that describe this kind of analytical access to the data are real-time (immediate), near real-time (within seconds), low latency (minutes) and high latency (hours).

- Query response time: how long does it take before the user receives a response? This is the classic “how quick is the query” question. Terms that fit here are immediate (real-time event response), interactive (seconds), “quick” (minutes) and batch (hours).
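To keep the two ideas apart, it can help to treat them as two separate measurements with two separate vocabularies. The minimal Python sketch below maps a duration onto the terms above. The exact cut-offs (under 1 second, under 60 seconds, under an hour) are our own assumption, since the terms only imply rough units, and the function names are hypothetical.

```python
from datetime import timedelta

# Assumed thresholds: the terms above only give rough units
# (immediate, seconds, minutes, hours), so these cut-offs are illustrative.
def _bucket(d: timedelta, labels: tuple[str, str, str, str]) -> str:
    """Map a duration to one of four labels, from fastest to slowest."""
    s = d.total_seconds()
    if s < 1:
        return labels[0]
    if s < 60:
        return labels[1]
    if s < 3600:
        return labels[2]
    return labels[3]

FRESHNESS_LABELS = ("real-time", "near real-time", "low latency", "high latency")
RESPONSE_LABELS = ("immediate", "interactive", "quick", "batch")

def describe_freshness(age: timedelta) -> str:
    """How old the data is when it reaches the analytics results."""
    return _bucket(age, FRESHNESS_LABELS)

def describe_response_time(elapsed: timedelta) -> str:
    """How long the user waits for the query to answer."""
    return _bucket(elapsed, RESPONSE_LABELS)

# The two axes are independent: a 2-second query over 6-hour-old data.
print(describe_response_time(timedelta(seconds=2)))  # interactive
print(describe_freshness(timedelta(hours=6)))        # high latency
```

The same query can be “interactive” on one axis and “high latency” on the other, which is exactly why a single “real-time” label is misleading.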

Different applications sit at different points in the matrix of “response time” vs. “data freshness”:

- Event processing (e.g. stock trading) uses fresh, real-time data and requires an immediate response time.

- Dashboards typically need near real-time or low-latency data (seconds to a few minutes old) and an immediate query response time.

- Ad-hoc queries typically require fast query response times, but may run on either fresh data or stale, high-latency data. This is the challenge Merv Adrian highlights.

- Canned reports and BI can tolerate both slow and quick query times, and their data freshness requirements vary too.

- ETL processes are typically high-latency and batch.

So, “real-time” analytics can mean many different things. That’s why the first step is to “assess your need for speed”, as the DBMS2 blog aptly puts it.

It’s important not to conflate “fresh data” with “query response time”. Dashboards are the best example: if you’ve invested time and money in building a dashboard, you want it to show what was going on minutes ago, not yesterday, no matter how quickly it refreshes.
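A simple way to avoid mixing the two is to record both numbers for every query. In this hypothetical sketch, `QueryTiming` stores when the newest row visible to the query was written and when the query started and finished. The timestamps describe an imagined dashboard refresh after a nightly load, not real measurements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class QueryTiming:
    """Hypothetical record of one query; field names are illustrative."""
    newest_row_written: datetime  # write time of the most recent row the query could see
    query_started: datetime
    query_finished: datetime

    @property
    def response_time(self) -> timedelta:
        # How long the user waited for the answer.
        return self.query_finished - self.query_started

    @property
    def staleness(self) -> timedelta:
        # How old the newest data was when the query started.
        return self.query_started - self.newest_row_written

# Imagined dashboard refresh: data last loaded at 02:00, queried at 14:30.
dashboard_query = QueryTiming(
    newest_row_written=datetime(2024, 1, 15, 2, 0),
    query_started=datetime(2024, 1, 15, 14, 30),
    query_finished=datetime(2024, 1, 15, 14, 30, 1, 500000),
)
print(dashboard_query.response_time)  # 0:00:01.500000
print(dashboard_query.staleness)      # 12:30:00
```

The query looks interactive, yet the answer it returns describes the world as it was half a day earlier.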

Now back to big data: what Adrian is saying is that “interactive” queries (i.e. ad-hoc queries run from BI or visualization tools) are not necessarily “near real time”. This pinpoints an important challenge for big data analytics: the ability to run ad-hoc queries is not enough when the data isn’t fresh. What the market needs is fast SQL queries (for satisfying interaction) on near real-time data (fresh data that was just written).

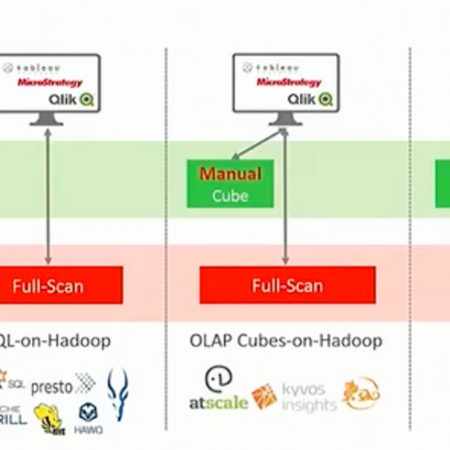

This is one of the big issues underlying the state of SQL on Hadoop. While progress has been made toward enabling real SQL queries on Hadoop, everything still relies on some form of batch preparation of data for analytics. This, of course, comes at a price: the data isn’t fresh. So even when some of the vendors touting SQL over Hadoop do deliver interactive ad-hoc queries, the “interaction” happens on a batch of data that isn’t necessarily fresh and is certainly not real time.
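To make that price concrete, consider a deliberately simplified model of batch preparation (an assumption for illustration, not how any particular SQL-on-Hadoop product works): data is cut into batches at fixed intervals, and each batch becomes queryable only after a load step finishes. The hypothetical `visible_after` function computes how long a row waits before analytics can see it, however fast the queries on that batch turn out to be. The 24-hour interval and 2-hour load time are made-up values.

```python
from datetime import timedelta

def visible_after(written_at: timedelta, interval: timedelta, load_time: timedelta) -> timedelta:
    """How long a row waits before batch-prepared analytics can see it.

    Simplified, assumed model: batches are cut at multiples of `interval`
    measured from time zero; each cut picks up every row written at or
    before it; the batch becomes queryable `load_time` after the cut.
    """
    # Ceiling division: index of the first cut at or after the write.
    cut_index = -(-written_at // interval)
    cut = cut_index * interval
    return cut + load_time - written_at

interval = timedelta(hours=24)  # hypothetical nightly cut
load = timedelta(hours=2)       # hypothetical preparation time

print(visible_after(timedelta(0), interval, load))          # 2:00:00 (written right at a cut)
print(visible_after(timedelta(minutes=1), interval, load))  # 1 day, 1:59:00 (just missed a cut)
```

With a nightly cut and two hours of preparation, a row written just after a cut stays invisible for almost 26 hours; making the queries faster does nothing to shorten that wait.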

So, when choosing an analytics solution for big data, remember to ask how fresh your data needs to be, not just how fast your queries are.