Share

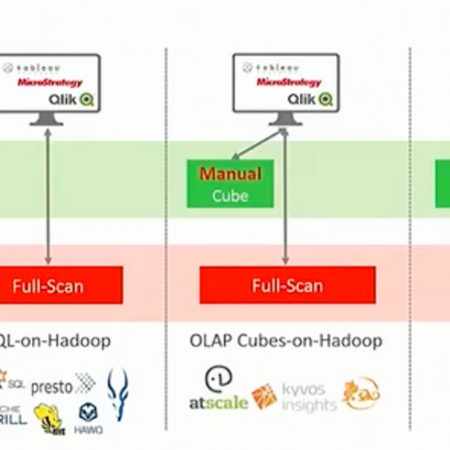

In this post, I would like to present a common optimization challenge, how it is solved in Jethro, and how some other SQL-on-Hadoop products "overcame" that challenge by manually modifying their benchmark scripts and queries to avoid the situation (which was quite a surprise for us when figured it out while running our own benchmarks).

Background

In a standard star schema, the large fact tables are joined to dimensions for filtering and lookups. Probably the most common dimension is a date dimension, as almost all business query are bounded by a time period. As an example, in TPCDS benchmark, many queries access the fact table store_sales and filter its content by joining to date_dim dimension.

For example, any query of store sales that limits results to December 2000 will look like:

SELECT (...)

FROM store_sales JOIN date_dim ON ss_sold_date_sk = d_date_sk (...)

WHERE d_year = 2000 and d_moy = 12 (...)

(... GROUP BY ... ORDER BY ...)

Since store_sales is a big fact table, it makes sense to partition it by time (which is represented as the date key - ss_sold_date_sk). As discussed in our previous post, in Jethro you can just partition the store_sales table on every day or 30 days or 365 days etc, usings s_sold_date_sk column. However, you can't do that in Hive and Impala as they do not support range partitioning. With Impala, Cloudera decided to partition by the ss_sold_date_sk column - leading to almost 2000 small partitions. With Hive, Hortonworks decided to add another string column to represent the year + month + day combination, leading to the same outcome (many small partitions).

After understanding that, the question arises- how can the query execution engine leverage the table partitioning to skip accessing partitions not needed by the query (partition pruning)?

Both Impala and Hive can do partition pruning only at query parse time. So, when the list of relevant partitions is based on the outcome of the join to date_dim table, they just can't do it. So, each company decided to manually rewrite the benchmark SQLs to add explicit, tailored WHERE condition:

Cloudera/Impala- Q19 - ss_sold_date_sk between 2451484 and 2451513-- partition key filter

Hortonworks/Hive - Q19 - ss_sold_date between '1999-11-01' and '1999-11-31'

Now, I'm sure each company must have noted it somewhere in their communication... Still, that hand-tailored SQL is far from what real-world SQLs are. Specifically, standard BI tools are very unlikely to know how to rewrite their SQL from a filter on date_dim table (for example - all holidays in a specific year) to add that specific condition on the fact table (store_sales).

Jethro Solution

In Jethro, we handle this situation automatically. At first we thought to add explicit dynamic partition pruning feature. However, we realized that our existing star transformation optimization automatically handles this case. With star transformation, filters on a dimension table are transformed into an IN predicate on the fact table. For example, using the SQL fragment from the beginning of the post, star transformation will add the following condition to the query (and optionally remove the join if possible):

WHERE ss_sold_date_sk IN

(SELECT d_date_sk FROM date_dim

WHERE d_year = 2000 and d_moy = 12)

After the transformation, our code automatically filters the unneeded partitions based on the IN condition on the partition key column ss_sold_date_sk.

Bottom line: by focusing on optimizing SQLs that are generated by common BI tools, the Jethro engine avoids common pitfalls.

Want to know more - check out our documentation or try it out yourself: Download Jethro