Share

Database Architectures for Business Intelligence Demystified: A Concise Comparison

Introduction

The year 2008 was one of drastic change in the world economy and in society. Major financial institutions collapsed, while Internet social networking service providers such as Facebook and Twitter (and later Uber and WhatsApp) grew exponentially.

This resulted in enormous changes in the database (DB) arena that had been based on the RDBMS (Relational Database Management System) paradigm since the late 1970s. This paradigm ensured high consistency and data integrity on the one hand, and high availability on the other hand, and was popular within the enterprise world, especially within financial institutions that could afford the high maintenance costs and effort required to scale up their databases.

However the new social media companies required something drastically different. The new service providers preferred high-availability, and the ability to scale their networks with low CapEx and OpEx in terms of hardware costs and personnel.

Data stores cannot support availability, consistency and partitioning (scaling) simultaneously (according to the CAP theorem), and this provided an opportunity for new radically different data storage products that began leading in many segments.

But are the new products really significant, and should you use them? And, most importantly, do they work with the leading Business Intelligence (BI) tools in the market? In this paper we explore these questions and provide clear guidelines as to which products we recommend, and for what purpose.

What data stores should you consider?

Data stores only become relevant when people and organizations use them. Important considerations when choosing a data store include the ease of report generation and analysis, and whether it is supported by Business Intelligence (BI) tools like Tableau, Qlik or Microstrategy. When a BI software vendor invests significant resources to support a data store then this is a good indication that the data store is worth considering.

Most of us are familiar with the most common RDBMS systems such as Oracle, MySQL, Microsoft SQL Server, IBM DB2, and Sybase ASE that still lead the data store market today (see db-engines.com ranking scores), however there are new data stores on the rise today.

According to our analysis, and data from the DB-Engines knowledge base, the market share of columnar databases (Cassandra, HPE Vertica, Sybase IQ, MonetDB, and SAP HANA), MPP (Massively Parallel Processing) databases (RedShift, IBM Netezza, Pivotal Greenplum, Teradata, Snowflake,and Hadoop Hive), search engines (Splunk, Solr, Attivio), and document data stores (MongoDB, Couchbase, MarkLogic) are gaining significant market share and are coming mainstream.

So what is causing this dramatic shift? Why have so many new data store categories been created in the last 10 years, and what business purposes do they serve? We will provide some possible answers to these questions below.

RDBMS: Relational Database Management System

Short intro

RDBMS systems are probably the most solid data store solution to date, and have been at the heart of the most common information storage solutions since the 1970s. The design of RDBMS systems best fits enterprise requirements where no transactions can afford to be lost, and high availability is a priority.

Leading products in this category

The leading products in this category are Oracle DB, MySQL, Microsoft SQL Server, IBM DB2. Sybase ASE and Postgres.

Key architecture

- Presents data to the user as relationships between entities. Each entity is presented in a tabular format and each table consists of a set of rows and columns.

- Provides relational operators such as JOIN and UNION to manipulate the data in its tabular format

- The most common standardized query language is ANSI SQL, although most vendors use extensions to this standard

- Data is saved fully normalized

Strengths

- Strong consistency of the data

- Includes mature High Availability (HA) solutions

- Minimal redundancy of data

- Independence from the physical data, since it can be stored using various storage engines, and can also be retrieved in the same way

Weaknesses

- Poor representation of 'real world' entities, and requires substantial efforts by software engineers to transform the object-oriented data to an entity model

- A nonlinear and complex scaling solution

- High Database Administration (DBA) and maintenance costs

- Difficult to represent complex data types using RDBMS as there is a data structure

- In large data stores it is difficult to change the table structure without causing major downtime, or a slowdown in the system

- No support for recursive queries. This limits support for hierarchical data models such as organization structure

Common use cases

- Financial transaction systems

- Low volume inter-organization data entry systems

RDBMS and BI

For decades BI tools have been designed to work with RDBMS systems that support JOIN between tables, and SQL, however ‘Big data’ RDBMS often fail to scale resulting in poor BI performance.

Tidbit

F. Codd, from IBM, invented the term "relational database" in 1970. He introduced the term in a paper he published: "A Relational Model of Data for Large Shared Data Banks".

Document Data Stores

Short intro

Document data stores are the heart of many modern information systems. Software engineers love the many useful features of document data stores such as built-in sharing, easy-to-setup clusters, end-to-end support for JSON and rapid deployment that avoids the need for a DBA in the initial phase.

Leading products in this category

The leading products in this category are MongoDB, Couchbase, and MarkLogic.

Key architecture

Document data stores save data as JSON-like documents in collections/tables. The document structure is flexible and every document can have different fields. For this reason, when you want to add new fields you do not need to make any change in existing documents or table structure. Data can be retrieved easily from the data store using predefined indexes.

Strengths

- Support rapid development, since software engineers only need to serialize data for data persistence

- Schema-less design enables support of new fields in a populated table without downtime or effects on the user experience.

- Data is stored in the JSON format that is completely fit for full stack Javascript platforms

- High insertion rates due to built-in shards

- Very easy to setup and maintain. Operational cost is usually 10% of a RDBMS system of the same size

Weaknesses

- Poor support of JOIN capabilities between entities

- If the document structure is complex, it is difficult to use BI tools and structured queries

- Unlike RDBMS systems that assign default values to fields and have data validation rules, when there is no schema these tasks must be managed at the application level.

Common use cases

- Content Management

- Catalog systems

- Analytics systems

- Billing systems where mediation can be avoided

Document Data Stores and BI

Most BI tools have only recently begun supporting document data stores, since most of the data stores did not support SQL. Even today, the lack of JOIN operations between entities still limits the type of analysis and dashboards you can create based on these document data stores.

Tidbit

MongoDB is considered the 4th most widespread and widely-used data store according to the db-engines.com knowledge base, just behind the 3 RDBMS products that lead the market (Oracle, MySQL and Microsoft SQL Server).

Columnar Databases

Short intro

Columnar databases store data in indexes and enable near real-time query response, and high insertion rates of 1 GB/s. The databases support SQL and are efficient in terms of storage and memory usage.

Leading products in this category

The leading products in this category are Cassandra/DataStax, HPE Vertica, Jethro, Sybase IQ, MonetDB, and SAP HANA.

Key architecture

Columnar databases store data as columns rather than as rows. They are designed to retrieve specific columns quickly without the need to retrieve the complete row, and discard non-relevant fields. Data is stored in indexes, rather on data tables, and therefore there is no need to retrieve data from the tables themselves.

Strengths

- Storage and memory use is efficient as every column is encoded and values are stored in index nodes

- Near real-time access to data (1-2 ms for read/write), based on data on index leaves

- Standard SQL is nearly fully supported

Weaknesses

- Data is modeled for specific queries

- If multiple queries are needed from the same data, then the data may need to be stored twice

- The SELECT * FROM pattern that is widely used in software programming should be avoided otherwise it may impact performance

Common use cases

- Authentication systems that can verify a user in O(1)

- Personalization systems that can return user data in O(1)

- Real-time bidding systems and online exchanges

- Real-time analytics where the insert rate is a key requirement

- Large data sets that exceed 1 TB in a single table/entity

- Retail systems where branch independence is key. Columnar databases allow users to read/write to each node even when the network between a retail branch and its headquarters is cut off

- Financial transaction real-time risk management systems

Indexes

Columnar databases are based on indexes. The indexes enable fast access to data by creating a tree-like search pattern where every node serves as a decision point, avoiding searching half of the remaining items in the table. However, indexes best serve specific queries that fit the specific index, and this may result in poor performance for other queries.

Columnar Databases and BI

Most columnar databases support SQL, and some support JOIN operations. These features position them as the best candidates for BI on “Big Data.” However, since data must be designed for specific queries, it is difficult to provide flexible queries for BI analysts.

Tidbit

Jethro, that provides a columnar database based on Hadoop, has solved the low-flexibility challenge of columnar databases (and indexes in general) by integrating search indexing (see below) into its solution. This makes Jethro the best fit for ‘big data’ and BI cases.

MPP: Massively Parallel Processing Data Stores

Short intro

MPP provides rapid and powerful analytics on petabyte-scale data volumes. This category used to be known as the data warehouse appliances category, and is specialized in enabling ad-hoc queries on large-scale data repositories for long-term analytics.

Leading products in this category

The leading products in this category are Amazon Redshift, IBM Netezza, Pivotal Greenplum, Teradata, Snowflake, Google BigQuery, Hadoop Impala, Hadoop Hive, Hadoop Drill, Hadoop Hbase, Facebook Presto, Spark SQL, MemSQL, EXASolution, and Kognitio.

Key architecture

- Shared (shared-nothing) architecture, where the entire query is executed on the nodes with emphasis on minimizing data movement

- Data is stored on different nodes

- Queries are split into different nodes, and only summarized and compacted results are returned from each

Strengths

- The data architecture is flexible enough to support any query with a dedicated index, or table model design

- Supports petabyte-level data requirements

- Supports the requirements of the modern offline machine-learning model

Weaknesses

- Poor support for real-time and near real-time use cases as query results can take between several seconds and several hours

- BI queries require massive scanning of data, and result in high utilization of server resources..

- Requires dedicated hardware, or large-size installations. MPP is not recommended for installations with less than 10 TB of data, or those with more than 100 TB of data.

- Scalability is limited (a maximum of 100-200 nodes)

Common use cases

- Large data warehouses with over 10 TB of data

- Long-term analytics

- Ad-hoc queries against that data

- Enterprise level data lakes

- Data store for training machine learning systems and models

Cubes

Cubes are multi-dimensional tables such as period, product and city, where data can be easily sliced and summarized according to one or more the dimensions. As cubes consist of large normalized tables, they are best suited for data warehouses.

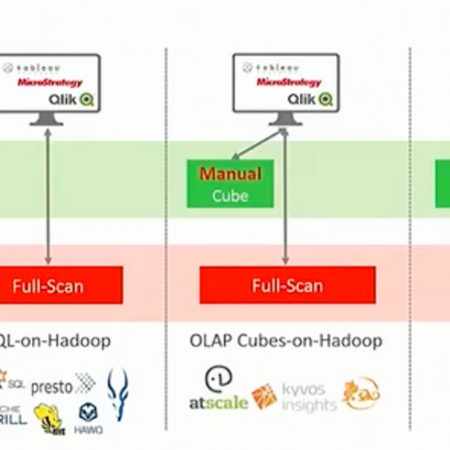

MPP and BI

MPP provides high flexibility that enables BI analysts create any query they want, and many of them support SQL. However, MPP data stores often scan massive amounts of data in order to return results, and this may result in high latency, and extensive usage of server resources (CPU, RAM and disk).

Tidbit

IBM Netezza is sold as a dedicated appliance that includes FPGA to augment CPUs and minimize network bus traffic.

Search Engines

Short intro

The search engine (also known as Google) is the heart of the internet. As enterprise software and SaaS become more content aware, and support larger data sets, the use of internal search engines to locate relevant data, or create context and geolocation-aware results, is coming mainstream.

Leading products in this category

The leading products in this category are Splunk, Solr, and Attivio.

Key architecture

During the ETL (Extract, Transform, and Load) phase, search engines analyze the input data and create terms and frequencies. These frequencies serve as a basis for indexes and inverse indexes that enable searching of the data modeled as documents, including fields and attributes such as geolocation.

Strengths

- Support for full-text searches, where users can query any field and get results in near real-time

- Support for spelling corrections

- Custom search models, where the order of results can be boosted based on given attributes (such as distance from a given location)

Weaknesses

- Search engines are not designed to be a primary data source

- Results may not be 100% accurate and may not be repeatable

- Results may depend upon the hardware load (RAM, CPU and disk utilization)

- Data load is CPU intensive

Common use cases

- Log aggregation and analysis

- Content management system internal search capabilities

- Internal website search

- Auto-completion and support for fuzzy searches (spelling mistakes)

- Location-based systems that adjust search results, and offers results based on the user location

Search Engines and BI

Most search engines do not support SQL or JOIN operations much like document data stores. This results in limitations in the type of analysis and dashboards you can create based on these data stores.

Tidbit

Although Apache Lucene is supported by only a few BI vendors, it is actually a key component in the fast growing search products Solr and Elasticsearch.

Summary

Various business requirements have resulted in major changes in the database and data-store landscape in the past 10 years. From an industry with only a single technical solution, the glorified RDBMS (that was best fit for enterprises), there are now a wide range of products each fit for a different business requirement. These products now match enterprise requirements, and are supported by leading BI providers.