Share

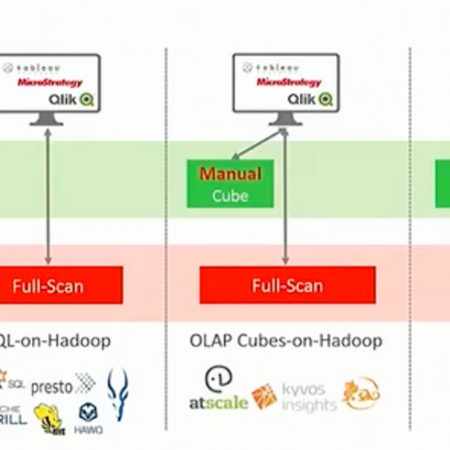

In my previous post, I explained how partitioning works in Jethro. In this post, I would like to explain how partitioning was implemented in Hive and Impala, why their design is very problematic, and how our implementation avoids those problems. Design matters!

Partitioning in Hive / Impala

In Hive and Impala, a partition is a directory of files. The partition column attribute is embedded in the directory name, so all rows in the same partition share the partition key. In other words, each partition covers a single value of the partition key. As we will see, that design has some unfortunate consequences,

Limitation - Partition Key Must Be Low Cardinality

A good partitioning key is one that helps in two scenarios. First, it should be a key that is used in most of the queries to enable partition pruning - automatically skipping irrelevant partitions. Second, it should be used as a basis for rolling window maintenance - like purging old data once a month. For example, most very large tables (fact tables) store an event per row, like phone calls, page views etc, so the most natural key for partitioning is the event timestamp. In that case, partitioning is a physical grouping of rows (events) per an interval of the event time (hourly or daily or monthly etc).

However, since Hive and Impala are limited to a single value per partition, users can not partition by the event timestamp column, as that will create millions of small directories and files (one per second or millisecond), which will hurt query performance and may also overwhelm HDFS. Even if the event timestamp is less granular (at the minute, hour or day level), it may make sense to partition the data at a different level.

For example, the TPC-DS benchmark generates five years of data. Even though the fact table has a day-level column, it may still make sense to partition by month (or quarter or year), as daily partitions leads to about 1800 small partitions (or about 20 million rows per day for 15TB scale factor).

So, as a workaround, in Hive and Impala you have to generate a separate column(s) from the event timestamp to represent the partition key and adapt your ETL scripts accordingly. Some people pick a string or a number that represents the day (like 20140925 or '2014-09-25'), others pick a set of separate columns like year, month, and day columns for multi-level partitioning.

As an example for that, in Hortonworks TPC-DS benchmark kit for Hive, they have modified the benchmark - added to the fact table store_sales an extra string column called ss_sold_date and rewrote the queries to explicitly use that column! In Cloudera TPC-DS benchmark kit they also manually rewrote the SQLs to explicitly refer to a range of partition keys (without adding an extra column). That is understandable due to their limited partitioning implementation (I'm sure both companies have mentioned this modification somewhere in the fine print). That it leads us to the second problem:

Limitation - Must Manually Rewrite All Queries To Use The Partition Key

When there is a separate partition key column, users that ask questions must manually rewritetheir SQL to include the partition key, so the database could do partition pruning. For example, maybe your query searched for data from the last eight hours: event_ts_column >= now() - 8*60*60. If you use a separate partition key column, you must add it to your query as well: event_date_string >= '2014-05-24'. This is a manual, error-prone work for every SQL statement that users write. Of course, BI tools can't generate this condition automatically, so users of those tools must always add both conditions and change them in tandem.

Partitioning in Jethro

As discussed in the previous post, Jethro implements range partitioning for timestamp and numeric columns. This means that you do not need to generate any extra column for the partition key. You can simply partition your large fact tables based on the event timestamp, while picking the interval (every four hours or every day or every month etc). Jethro partitioning is transparent to the users and BI tools - we don't burden them with the responsibility to add extra conditions for each SQL to make it fast. It just works.

Want to know more? Download our product and try it out yourself.